API Testing in Software Development: Types, Strategies & Best Practices (2025)

Learn API testing in software development with types, strategies, and best practices. Ensure reliability, security, and performance of your APIs with modern testing tools.

All Articles

API Design Principles: Ultimate Guide to Building Better APIs

Master the 7 essential API design principles that make APIs intuitive, secure, and easy to test. Learn consistency, simplicity, and error handling best practices.

api design principles

Google Gemini Advanced Now Free for Students – How to Access It

U.S. college students can access advanced AI tools for coding and research for free, enhancing their skills and productivity.

Google Gemini Advanced

How to Get a Rugcheck API Key and Start Using the API

Learn how to secure your tokens from scams using a powerful API that automates risk detection and enhances application security.

Rugcheck API

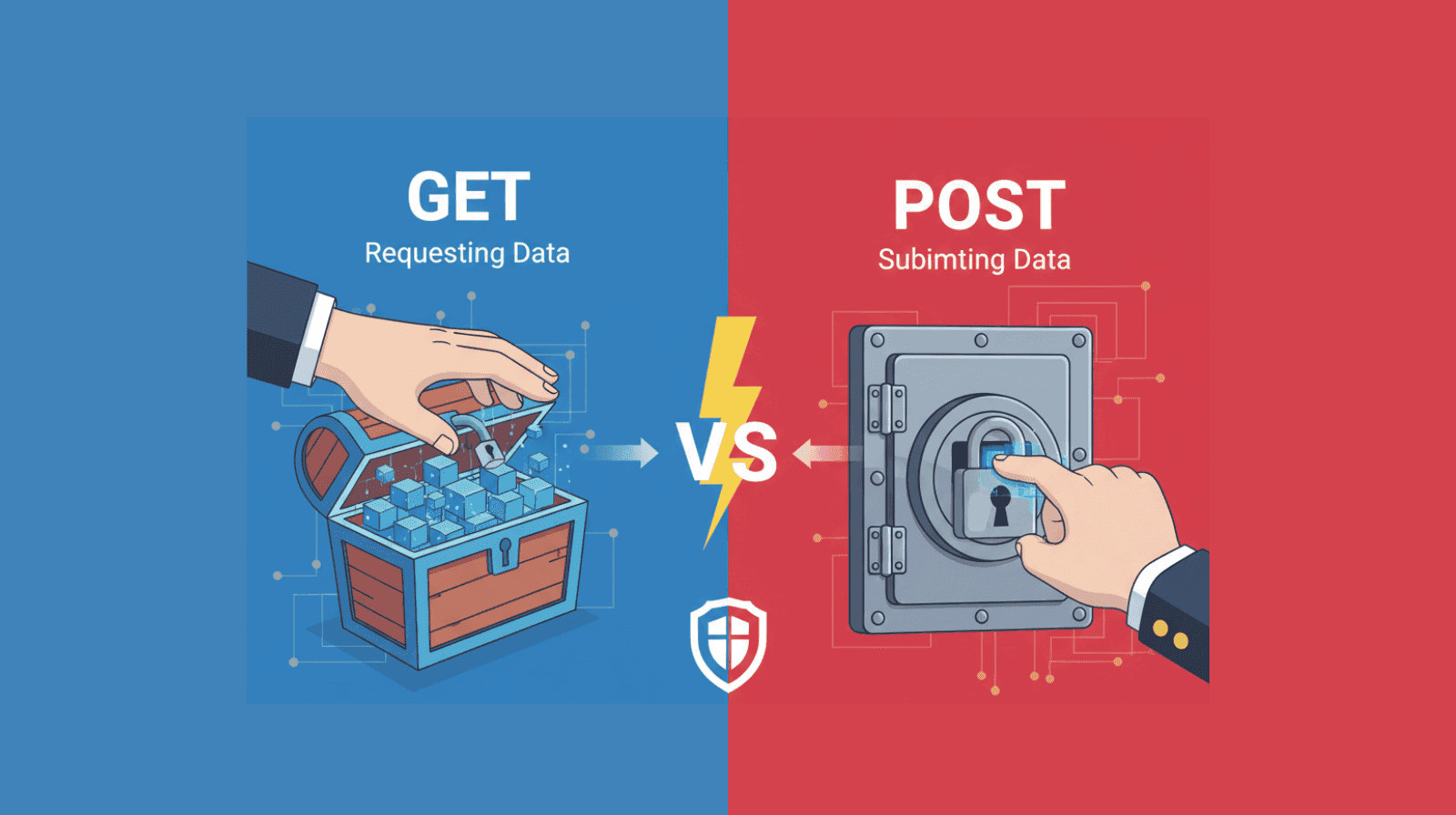

GET vs POST: Key Differences & Examples (2026)

Learn when to use GET vs POST, with security, caching, Content-Type tips, and CI/CD examples for reliable APIs.

GET

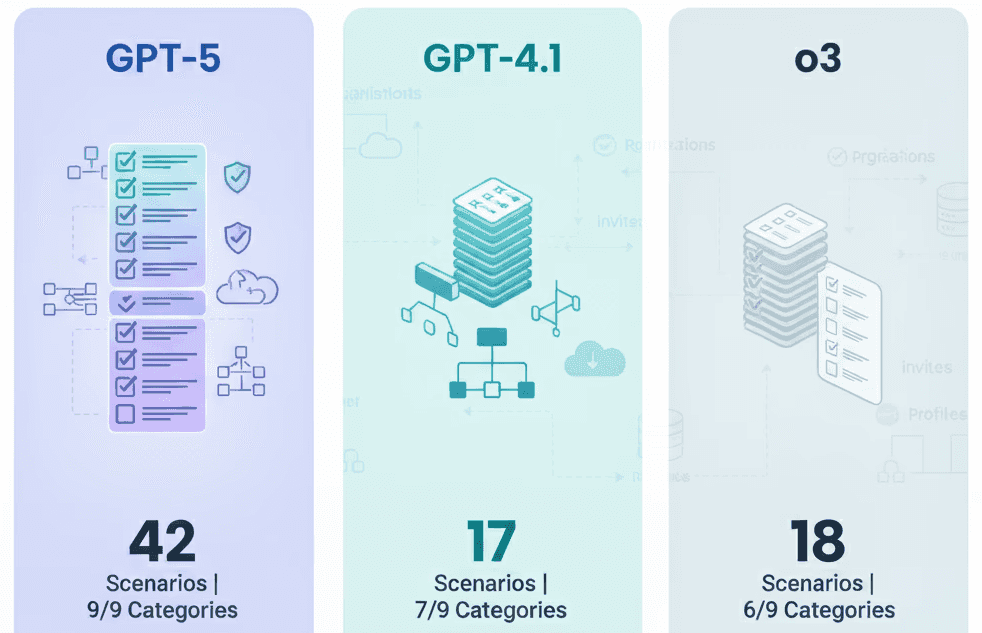

GPT-5 vs o3 vs GPT-4.1: Which One Is Better for Integration Testing?

Compare GPT-5, GPT-4.1 & o3 for integration testing with detailed scenarios on auth, validation, headers, and edge cases.

GPT-5 integration testing

Postman Explained: A Beginner’s Guide

Postman is a widely used tool for API development and testing. It helps developers send API requests, view responses, and troubleshoot issues through a simple interface. Teams often use it to organize API requests into collections, automate basic tests, and create shared workspaces for collaboration.

Postman tutorial for beginners

Best Free Google AI Tools to Boost Your Projects

Explore the best free Google AI tools to enhance your projects, from content creation to machine learning and API testing.

Google AI tools

5 Ways to Use Cursor AI for Free

Explore five effective ways to leverage a free AI tool for enhancing coding productivity, from API testing to debugging and automation.

Cursor AI

Security Testing Tools and Their Types

Explore essential security testing tools and their types, including SAST, DAST, and IAST, to protect your software from vulnerabilities.

security testing

API Security Checklist: 12 Steps to a Secure API

Secure your APIs with this comprehensive 12-step checklist, covering authentication, data protection, monitoring, and more.

API security

8 API Testing Tools You Should Know

Explore essential API testing tools that enhance reliability, security, and performance in modern software development workflows.

API testing

API Security Best Practices for 2026: Gateway/WAAP, OAuth 2.1, Workload Identity & CI/CD Recipes

Secure APIs in 2026 with gateway/WAAP, OAuth 2.1 + DPoP/mTLS, workload identities, schema validation, and CI/CD gates—plus ready-to-paste playbooks.

API security