Why Record & Playback Fails for Scalable Test Automation

Summary

Record and playback testing, despite its appealing simplicity, falls short in enterprise environments due to critical limitations. The approach skips crucial test design planning, leading to unstructured and hard-to-maintain test suites. Technical issues like poor modularity, script brittleness, and unreliable element identification make these tools impractical for complex applications. Implementation challenges, including inadequate error handling and debugging capabilities, further compound the problems. For enterprises, this translates to poor ROI and high maintenance costs, making it essential to invest in more robust testing approaches that prioritize design, modularity, and long-term sustainability.

Introduction

In the fast-paced world of software testing, who wouldn't want a magic wand that automatically creates test scripts? That's exactly what record and playback testing promises – a seemingly perfect solution where you record your actions and play them back as automated tests. Sounds too good to be true, right?

Well, as it turns out, it often is.

While record and playback tools might seem like the perfect shortcut for enterprise testing needs, they're a bit like using a bicycle for a cross-country journey. Sure, it'll get you started, but you'll quickly realize it's not the most practical long-term solution.

Think about it – major enterprises handle complex applications, multiple integrations, and frequent updates. They need testing solutions that can keep up with their scale and speed. Record and playback tools, despite their appealing simplicity, often create more problems than they solve in these environments.

The challenges? They range from maintenance nightmares to reliability issues that can bring your testing efforts to a grinding halt. But before you commit to this approach, let's dive deeper into why record and playback might not be the silver bullet you're looking for in enterprise test automation.

The Design-First Problem

Imagine building a house without a blueprint. Sounds risky, right? That's exactly what happens with record and playback testing. You're jumping straight into creating test scripts without laying the proper foundation of test design.

Here's the real problem: When you start recording tests immediately, you're essentially putting the cart before the horse. You're capturing actions without thinking about:

- How these tests will fit into your overall testing strategy
- What test scenarios need automation
- How to structure your tests for long-term maintenance

Let's put this in perspective. Would you trust a development team that starts coding without any design or architecture planning? Probably not. Yet, many teams fall into this exact trap with record and playback testing, thinking it will save time.

The consequences? You end up with a collection of disconnected test scripts that:

- Don't follow any unified testing strategy
- Miss critical test scenarios
- Become increasingly difficult to maintain
- Lack the structure needed for scaling

The solution isn't to record first and think later. Just like software development needs proper architecture and design, test automation needs thoughtful planning and structure before implementation. This design-first approach might take more time initially, but it saves countless hours of maintenance and rework down the line.

Core Technical Limitations

Think of record and playback scripts like a one-take movie scene - if anything goes wrong, you have to start all over again. While this might work for simple scenarios, it creates major headaches in enterprise environments.

The Modularity Puzzle

Record and playback tools create scripts that are about as flexible as a steel rod. Here's what makes them problematic:

- Tests run like a single long story with no chapters or breaks
- You can't easily reuse common actions (like login steps) across different tests
- Making changes feels like trying to replace a single card in a house of cards

Let's say you need to test five different features that all require logging in first. Instead of having one reusable login module, you end up with the same login steps copied across five different recordings. When your login page changes, you're updating five scripts instead of one.
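To make the contrast concrete, here is a minimal sketch of the reusable-login idea. Everything in it is hypothetical: `FakeBrowser` is a stand-in that only logs steps rather than driving a real browser, and the page paths, field names, and credentials are made up for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class FakeBrowser:
    """Hypothetical stand-in for a browser driver; it only logs the steps it is asked to run."""
    steps: list = field(default_factory=list)

    def do(self, action: str, target: str, value: str = "") -> None:
        self.steps.append((action, target, value))


def login(browser: FakeBrowser, user: str, password: str) -> None:
    """The single place that knows how the login page works."""
    browser.do("open", "/login")
    browser.do("type", "username", user)
    browser.do("type", "password", password)
    browser.do("click", "sign-in")


def orders_flow(browser: FakeBrowser) -> None:
    # Reuses login() instead of carrying its own copy of the login steps.
    login(browser, "demo-user", "demo-pass")
    browser.do("open", "/orders")


def profile_flow(browser: FakeBrowser) -> None:
    login(browser, "demo-user", "demo-pass")
    browser.do("open", "/profile")


if __name__ == "__main__":
    for flow in (orders_flow, profile_flow):
        browser = FakeBrowser()
        flow(browser)
        print(flow.__name__, len(browser.steps), "steps")
```

If the login page gains a new field, only `login()` changes, and every flow that calls it picks up the fix.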

When Scripts Break

Remember that house of cards comparison? Here's where it really shows:

- Change one tiny element in your UI? Your entire test might fail
- Tests depend heavily on specific conditions present during the recording
- Even small updates to your application can cause multiple test failures

The real kicker is when your application undergoes regular updates. Each change becomes a game of "find and fix the broken scripts" - not exactly the efficiency boost you were hoping for.

Want a real-world example? Imagine recording a test for an e-commerce checkout process. The script captures exact dollar amounts, specific product names, and particular dates. When prices change or new products are added, your test breaks. With proper parameterization and modular design, these changes would be simple updates rather than complete rewrites.
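As a rough sketch of what parameterization could look like for that checkout case, the test below takes product, price, and quantity as data and derives the expected total instead of hardcoding it. The catalog entries and the `fake_checkout` helper are assumptions made for illustration; in a real suite the helper would drive the actual checkout page.

```python
from decimal import Decimal

# Hypothetical test data; a real suite might load this from a fixture or a data file.
CHECKOUT_CASES = [
    {"product": "notebook", "unit_price": Decimal("4.50"), "quantity": 2},
    {"product": "backpack", "unit_price": Decimal("39.99"), "quantity": 1},
]


def expected_total(unit_price: Decimal, quantity: int) -> Decimal:
    """Derive the expected total from the inputs rather than recording a fixed amount."""
    return unit_price * quantity


def fake_checkout(product: str, unit_price: Decimal, quantity: int) -> Decimal:
    """Stand-in for driving the real checkout UI; returns the total the page would show."""
    return unit_price * quantity


def run_checkout_cases(cases: list) -> list:
    """Run every case and report (product, passed) pairs."""
    results = []
    for case in cases:
        shown = fake_checkout(**case)
        wanted = expected_total(case["unit_price"], case["quantity"])
        results.append((case["product"], shown == wanted))
    return results
```

When a price changes or a product is added, the update is one more row in `CHECKOUT_CASES`, not a new recording.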

Implementation Challenges

Let's dive into the real-world hurdles that make record and playback testing a headache for enterprise teams.

Finding the Right Elements

Ever played "Where's Waldo?" with web elements? That's what record and playback tools often do. Here's the problem:

- They rely on basic attributes like IDs and names that often change
- Modern frameworks use dynamic elements that confuse these tools
- Single-page applications and complex UIs often break recorded scripts

For example, imagine your app uses React with dynamically generated class names. Your record and playback tool might latch onto these temporary identifiers, making your tests as reliable as a chocolate teapot.
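One way to sidestep generated identifiers is to choose locators deliberately rather than accept whatever the recorder captured. The sketch below is a heuristic, not a standard: the `data-testid` hook is a common convention assumed here, and the `css-`/`sc-` prefix check is only a rough guess at what machine-generated values look like in your stack.

```python
import re

# Attributes in order of preference. "data-testid" is an assumed convention;
# your application may expose a different dedicated test hook.
PREFERRED_ATTRIBUTES = ("data-testid", "id", "name")

# Rough heuristic for values that look machine-generated, such as "css-1x2y3z".
GENERATED_VALUE = re.compile(r"^(css|sc)-[A-Za-z0-9]+$")


def build_selector(attributes: dict) -> str:
    """Return a CSS attribute selector built from the most stable attribute available."""
    for name in PREFERRED_ATTRIBUTES:
        value = attributes.get(name)
        if value and not GENERATED_VALUE.match(value):
            return f'[{name}="{value}"]'
    # Failing loudly beats silently falling back to a brittle class name.
    raise LookupError(f"no stable attribute found in {sorted(attributes)}")
```

For an element with `{"class": "css-1x2y3z", "data-testid": "checkout-button"}`, this picks the test hook and ignores the generated class entirely.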

When Things Go Wrong

The biggest issue? Record and playback tools are terrible at handling the unexpected. Think about these scenarios:

- A loading spinner appears longer than usual
- A popup message shows up unexpectedly
- Network delays cause elements to load slowly

Your recorded script will likely fail in all these cases, leaving you with cryptic error messages and no clear path to fix the issue.

Here's what you're missing:

- Smart wait mechanisms
- Proper error reporting
- Clear debugging information
- Recovery strategies for common failures

The reality is, in enterprise environments, these aren't edge cases – they're daily occurrences. Without proper error handling and debugging capabilities, you'll spend more time investigating failures than actually improving your test coverage.
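For a sense of what a smart wait with useful error reporting might look like, here is a minimal polling helper. It is a sketch under stated assumptions: `wait_until`, `WaitTimeout`, and `FakeSpinner` are illustrative names, not part of any particular tool, and the timeout and interval defaults are arbitrary.

```python
import time


class WaitTimeout(Exception):
    """Raised when a condition does not become true in time."""


def wait_until(condition, description: str, timeout: float = 5.0, interval: float = 0.1):
    """Poll `condition` until it returns a truthy value or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    attempts = 0
    while True:
        attempts += 1
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            # Say what was being waited for, so the failure is not cryptic.
            raise WaitTimeout(
                f"timed out after {timeout}s waiting for {description} "
                f"({attempts} attempts)"
            )
        time.sleep(interval)


class FakeSpinner:
    """Hypothetical loading spinner that disappears after a set number of polls."""

    def __init__(self, polls_until_gone: int) -> None:
        self.remaining = polls_until_gone

    def is_gone(self) -> bool:
        self.remaining -= 1
        return self.remaining <= 0


if __name__ == "__main__":
    spinner = FakeSpinner(polls_until_gone=3)
    wait_until(spinner.is_gone, "loading spinner to disappear", timeout=1.0, interval=0.01)
    print("spinner gone, test can continue")
```

A slow spinner now just takes a few more polls, and a genuine hang produces a message that names what never showed up.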

Business Impact

Let's talk money and time - the two things record and playback testing promises to save but often ends up consuming more of.

The Real Cost Story

On paper, record and playback looks like a budget-friendly option: minimal training, quick start, and instant results. But here's what actually happens in enterprise environments:

- Teams spend more time fixing broken tests than creating new ones
- Test maintenance becomes a full-time job for multiple team members
- Critical bugs slip through due to unreliable test results

Think about it: You might save 2 hours today by quickly recording a test but spend 10 hours next week fixing it when your application updates.
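The arithmetic behind that trade-off fits in a few lines. This sketch reuses the illustrative figures from the paragraph above (2 hours saved, 10 hours of repair). The number of updates is an assumption you would replace with your own release cadence.

```python
def net_hours_saved(hours_saved_recording: float,
                    repair_hours_per_update: float,
                    updates: int) -> float:
    """One-time recording savings minus repair time across application updates."""
    return hours_saved_recording - repair_hours_per_update * updates


if __name__ == "__main__":
    # Illustrative figures from the text: 2 hours saved, 10 hours to fix after one update.
    print(net_hours_saved(2, 10, updates=1))
```

A negative result means the recording has already cost more time than it saved, and each further update widens the gap.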

The Maintenance Money Pit

Here's what typically happens in enterprise settings:

- 60-70% of the testing time goes to maintenance rather than new test creation
- Multiple team members are needed just to keep existing tests running
- Constant fixes are required after each application update

Enterprise-Level Reliability Concerns

In enterprise environments, unreliable tests aren't just annoying - they're expensive. Consider these impacts:

- Failed deployments due to flaky tests
- Delayed releases because of false test failures
- Lost developer time investigating test failures that aren't actual bugs
- Decreased confidence in the testing process

The bottom line? While record and playback might seem like a cost-effective solution initially, it often becomes a resource drain that affects your entire development pipeline. For enterprises seeking scalable, reliable test automation, it's crucial to look beyond the apparent simplicity of record and playback tools.

Conclusion

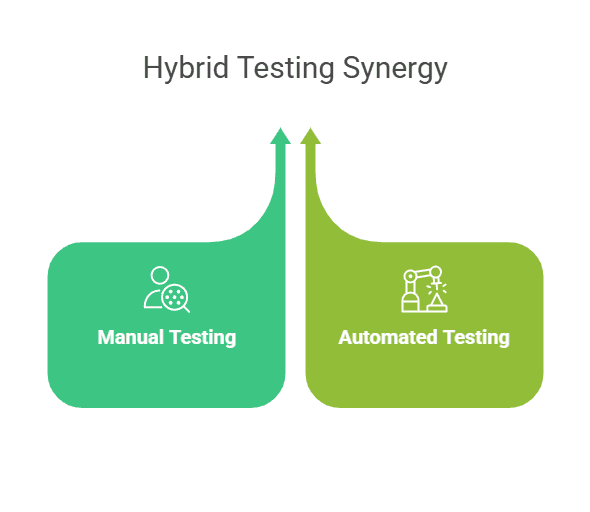

Record and playback testing is like a starter car - great for learning but not ideal for the long haul. For enterprises looking to build scalable, reliable test automation, it's time to look beyond quick-fix solutions.

Instead of falling for the "quick & magical" promise, focus on building a robust testing foundation. This means investing in proper test design, modular frameworks, and reliable automation tools that can grow with your needs.

The future of enterprise testing isn't about quick wins - it's about sustainable success.
