How AI Enhances DevOps Monitoring

AI is transforming DevOps monitoring by making it smarter, faster, and more efficient. Traditional monitoring reacts to problems after they occur, but AI enables predictive monitoring that identifies and fixes issues before they impact users. Here’s what you need to know:

Proactive Problem Solving: AI detects anomalies and predicts failures, cutting unplanned outages by about 25% in the IDC research cited later in this article.

Faster Issue Resolution: Smart alerts and real-time log analysis cut the mean time to resolution (MTTR).

Dynamic Thresholds: AI automatically adjusts alert thresholds, reducing false positives by over 60%.

Self-Updating Systems: AI-powered tools streamline testing, deployment, and scaling, saving time and reducing errors.

Improved Security: AI automates API discovery, generates security tests, and checks APIs against the OWASP Top 10.


AI Anomaly Detection in DevOps

Traditional monitoring systems often rely on rigid thresholds, which can miss subtle irregularities or generate excessive false alarms. AI changes the game by learning what "normal" looks like and automatically flagging deviations that might escape human attention.

AI-driven anomaly detection doesn’t just stop at basic threshold monitoring. These systems establish baselines for normal operational behavior and trigger alerts only when genuine anomalies arise [5]. Instead of overwhelming teams with unnecessary alerts, AI correlates multiple events to uncover root causes rather than focusing on isolated symptoms [5].

What’s more, AI improves with every incident, refining its accuracy over time. This reduces false positives and allows teams to concentrate on solving real problems [5]. These advancements set the stage for more sophisticated log analysis, as explored below.
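To make the idea of a learned baseline concrete, here is a minimal sketch that flags a value when it sits far from the mean of recent history. It uses a plain z-score rather than a trained model, and the function name, the sample latencies, and the limit of 3 standard deviations are illustrative assumptions, not something taken from a specific monitoring product.

```python
from statistics import mean, stdev

def is_anomaly(history, value, z_limit=3.0):
    """Flag `value` if it is more than `z_limit` standard deviations from the baseline.

    `history` is a list of recent "normal" readings (an assumption of this sketch).
    """
    baseline = mean(history)
    spread = stdev(history)
    if spread == 0:
        # A perfectly flat history: any different value counts as a deviation.
        return value != baseline
    return abs(value - baseline) / spread > z_limit

# Illustrative request latencies in milliseconds.
latencies_ms = [102, 98, 101, 99, 100, 103, 97, 100]

print(is_anomaly(latencies_ms, 101))  # close to the baseline
print(is_anomaly(latencies_ms, 160))  # far from the baseline
```

Production systems typically replace the fixed mean and standard deviation with models that account for seasonality and correlate several signals, but the underlying idea of comparing against learned "normal" behavior is the same.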

Real-Time Log Analysis and Error Detection

Log data is expanding at an astonishing rate - 50 times faster than business data [10]. Manually analyzing this flood of information is no longer feasible. AI steps in to simplify the process, automatically detecting unusual patterns, trends, or deviations from expected behavior [11].

Machine learning models excel at processing massive data sets, uncovering hidden patterns far faster and with greater precision than human teams [10]. This speed advantage plays a critical role in reducing Mean Time to Resolution (MTTR), as early warning signs can be identified and addressed before they escalate into major failures [10].

For instance, AI can analyze logs and metrics to predict potential system failures or performance issues, enabling proactive maintenance and quicker problem resolution [4]. Imagine an e-commerce platform facing a surge in traffic during a sale. An AI system could pinpoint bottlenecks within seconds and take corrective action, ensuring customers have a seamless shopping experience. Beyond solving immediate issues, AI learns from these incidents, enhancing its ability to prevent similar problems in the future [6].
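One simple way to surface unusual log patterns is to collapse each line into a template and report templates that never appeared during a known-good period. The sketch below assumes that approach; the helper names and sample log lines are hypothetical, and real log-analysis tools use far more robust parsing and statistical models.

```python
import re
from collections import Counter

def to_template(line):
    """Replace hex ids and numbers with placeholders so similar lines group together."""
    line = re.sub(r"0x[0-9a-fA-F]+", "<HEX>", line)
    return re.sub(r"\d+", "<NUM>", line)

def new_patterns(baseline_lines, recent_lines):
    """Return templates (with counts) seen in recent logs but never in the baseline."""
    known = {to_template(line) for line in baseline_lines}
    counts = Counter(to_template(line) for line in recent_lines)
    return {template: n for template, n in counts.items() if template not in known}

# Hypothetical log lines for illustration only.
baseline = ["GET /cart 200 in 12ms", "GET /cart 200 in 15ms"]
recent = [
    "GET /cart 200 in 14ms",
    "DB pool exhausted after 30 retries",
    "DB pool exhausted after 31 retries",
]

for template, count in new_patterns(baseline, recent).items():
    print(f"{count}x new pattern: {template}")
```

Grouping by template keeps the output small even when the raw log volume is large, which is what makes this kind of check usable in near real time.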

Dynamic Threshold Setting

Dynamic threshold setting is another way AI improves monitoring accuracy. Static thresholds often fall short during traffic spikes, seasonal fluctuations, or system updates, creating unnecessary challenges for DevOps teams. Dynamic thresholds address this by continuously adjusting alert parameters based on real-time system behavior [6].

For example, Validio’s research shows that dynamic thresholds can cut false positives by over 60% [7]. These thresholds adapt to seasonal trends and intricate data patterns [7]. Consider disk write time monitoring: a static threshold of 20 milliseconds might generate numerous false positives as disk usage increases. However, adaptive thresholds recalibrate daily, using data from the past week, to avoid such issues [8].
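The disk write time example can be sketched as follows. This is a minimal illustration that recomputes the threshold from the past week's readings as mean plus a multiple of the standard deviation; the multiplier `k`, the sample values, and the function name are assumptions, and the cited tools may calibrate their thresholds differently.

```python
from statistics import mean, stdev

STATIC_LIMIT_MS = 20.0  # the fixed threshold from the example above

def adaptive_threshold(past_week_ms, k=3.0):
    """Recompute the alert threshold from the last seven daily readings."""
    return mean(past_week_ms) + k * stdev(past_week_ms)

# Hypothetical daily average disk write times (ms) as disk usage grows.
past_week = [18.0, 19.0, 21.0, 22.0, 20.0, 21.0, 19.0]
today = 23.0

static_alert = today > STATIC_LIMIT_MS
adaptive_alert = today > adaptive_threshold(past_week)

print(f"static alert: {static_alert}, adaptive alert: {adaptive_alert}")
```

With these numbers the static rule fires while the adaptive one does not, because 23 ms is within the range the system has recently shown. Rerunning `adaptive_threshold` once a day on a sliding seven-day window gives the daily recalibration described above.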

AI-powered alerting also learns to distinguish between normal and abnormal spikes [5]. Instead of flagging every traffic increase, the system sends alerts only when spikes are paired with other concerning signals, such as rising error rates or failed transactions. Dynamic thresholds further mitigate the limitations of poorly tuned static thresholds by reducing excessive notifications while ensuring critical alerts are not missed [9]. This creates a more dependable monitoring environment for DevOps teams, allowing them to focus on what truly matters.
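The rule of alerting only when a spike is paired with other warning signs can be expressed as a small predicate. The sketch below hard-codes the limits for clarity; in an AI-driven system these limits would be learned, and every parameter name and default value here is an illustrative assumption.

```python
def should_alert(traffic_ratio, error_rate, failed_tx_rate,
                 spike_ratio=1.5, max_error_rate=0.02, max_failed_tx_rate=0.01):
    """Alert only when a traffic spike coincides with another concerning signal.

    traffic_ratio: current traffic divided by the expected traffic for this time.
    error_rate / failed_tx_rate: fractions between 0 and 1.
    """
    spike = traffic_ratio >= spike_ratio
    corroborated = error_rate > max_error_rate or failed_tx_rate > max_failed_tx_rate
    return spike and corroborated

# A sale-day surge with healthy error rates stays quiet.
print(should_alert(traffic_ratio=2.4, error_rate=0.004, failed_tx_rate=0.001))
# The same surge with failing transactions raises an alert.
print(should_alert(traffic_ratio=2.4, error_rate=0.004, failed_tx_rate=0.05))
```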

Predictive Monitoring for Early Issue Resolution

Anomaly detection is great at catching problems as they happen, but predictive monitoring takes it a step further by forecasting issues before they occur. This forward-thinking approach changes the game for DevOps teams, shifting them from constantly reacting to problems to proactively preventing them. It lays the groundwork for strategies like failure prediction and automatic scaling, helping teams stay ahead of potential disruptions.

AI plays a central role here, analyzing both historical and real-time data to spot patterns and outliers that might signal trouble ahead [12]. What makes this so powerful is AI's ability to detect subtle changes in system behavior - things that would fly under the radar for humans. Often, these early warning signs can be identified hours or even days before a system failure happens [13].

According to IDC research, organizations using AI-powered monitoring have seen unplanned outages drop by 25% [12]. This improvement comes from AI's ability to process massive amounts of data across development stages, making failure predictions more accurate [14].

"Predictive monitoring is transforming enterprise operations by combining the latest technologies with strategic implementation. By preventing issues before they escalate through early detection, enhancement of reliability and better performance optimization, organizational efficiency can be significantly improved."

– Hrushikesh Deshmukh, Senior Consultant, Fannie Mae [12]

Failure Prediction Models

AI-driven failure prediction models are all about using past data to predict future breakdowns and suggest ways to avoid them. These models are particularly effective at analyzing large datasets to uncover patterns that typically precede system failures, giving teams the insights they need to act before things go wrong.

A great example of this is Netflix, which uses AI-powered predictive monitoring to analyze billions of daily metrics. This helps them detect potential service disruptions and ensure their platform runs smoothly [12][13]. Netflix even takes it a step further by using tools like Chaos Monkey to deliberately introduce faults into its systems. By studying how their architecture handles these disruptions, they can pinpoint weak spots and address them before they become real problems [13].

Predictive analytics can also significantly boost defect detection. A Capgemini report suggests that these tools can improve detection rates by up to 45% [14]. AI achieves this by analyzing historical deployment data to predict potential issues, recommend optimal deployment times, and even trigger alerts or corrective actions before users are affected [17].

For these models to work effectively, organizations need to prioritize high-quality data collection, thorough validation, and regular auditing of their AI systems to maintain accuracy [12].
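As a toy illustration of predicting a failure from past data, the sketch below fits a straight line to hourly disk-usage readings and estimates how many hours remain before a limit is reached. It is a deliberately simple stand-in for the machine learning models discussed above; the function name, the sample readings, and the 90% limit are assumptions.

```python
def hours_until_limit(samples, limit):
    """Estimate hours until `limit` is reached, from hourly readings (oldest first).

    Fits a least-squares line and extrapolates it. Returns None when there is
    too little data or the trend is flat or decreasing.
    """
    n = len(samples)
    if n < 2:
        return None
    xs = range(n)
    x_mean = sum(xs) / n
    y_mean = sum(samples) / n
    slope = (sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, samples))
             / sum((x - x_mean) ** 2 for x in xs))
    if slope <= 0:
        return None
    intercept = y_mean - slope * x_mean
    hour_at_limit = (limit - intercept) / slope
    # Subtract the index of the latest sample to get time remaining from now.
    return max(0.0, hour_at_limit - (n - 1))

disk_used_pct = [70, 72, 74, 76, 78]  # hypothetical hourly readings
remaining = hours_until_limit(disk_used_pct, limit=90)
print(f"Estimated hours until 90% full: {remaining}")
```

A linear trend only works for steadily growing metrics. The emphasis on data quality and regular auditing above applies here too: a few bad readings can swing the fitted slope and the resulting estimate.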

Automatic Scaling and Optimization

Predictive monitoring doesn’t stop at identifying potential failures - it also helps optimize resource allocation through automatic scaling. By forecasting resource needs, AI can ensure systems scale up during traffic surges and scale down during quieter periods, preventing bottlenecks and cutting unnecessary costs.

Take Microsoft Azure, for instance. It uses predictive monitoring to help businesses anticipate server loads during high-traffic events, like holiday shopping seasons, ensuring websites perform smoothly under pressure [12]. Similarly, Amazon applies predictive monitoring to its cloud-native microservices architecture, automatically adjusting resources based on expected demand. This ensures continuous performance even during spikes in activity [12].

AI tools also streamline issue resolution by detecting and addressing basic system problems with minimal human input. By analyzing large datasets in cloud environments, these tools can quickly pinpoint the root cause of potential failures, slashing resolution times and minimizing downtime [12].

The results speak for themselves. Forrester's 2024 State of DevOps Report found that organizations using AI in their DevOps pipelines reduced release cycles by an average of 67% [15]. Meanwhile, IBM’s 2024 DevSecOps Practices Survey revealed a 43% drop in production incidents caused by human error for teams leveraging AI-assisted operations [15]. And according to Deloitte's 2025 Technology Cost Survey, businesses implementing these tools saw a 31% reduction in total cost of ownership for enterprise applications, thanks to less downtime, better resource use, and fewer manual interventions [15].

Here’s how automatic scaling works: AI continuously monitors system metrics and uses historical patterns, seasonal trends, and real-time data to predict future resource demands. When it anticipates a spike in activity, the system automatically provisions additional resources to maintain performance. During slower periods, resources are scaled back to save costs while ensuring service quality remains intact. This dynamic approach keeps systems running efficiently, no matter the workload.
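The scaling loop described above can be sketched in two steps: forecast demand, then convert the forecast into a replica count. The forecast here is a naive blend of seasonal history and the current rate, not a trained model, and all names, the 25% headroom, and the replica limits are illustrative assumptions.

```python
import math

def forecast_rps(same_hour_history, recent_rps):
    """Naive forecast: the larger of the seasonal average and the current rate."""
    seasonal = sum(same_hour_history) / len(same_hour_history)
    return max(seasonal, recent_rps)

def replicas_needed(expected_rps, rps_per_replica, headroom=1.25,
                    min_replicas=2, max_replicas=50):
    """Turn a demand forecast into a replica count, with headroom and bounds."""
    wanted = math.ceil(expected_rps * headroom / rps_per_replica)
    return max(min_replicas, min(max_replicas, wanted))

# Hypothetical traffic for the same hour on previous days (requests per second).
peak = forecast_rps([900, 1100, 1000], recent_rps=600)
quiet = forecast_rps([120, 150, 180], recent_rps=100)

print(replicas_needed(peak, rps_per_replica=100))   # scale up ahead of the surge
print(replicas_needed(quiet, rps_per_replica=100))  # scale down, but keep the minimum
```

The minimum replica count keeps service quality intact during quiet periods, and the maximum caps cost if the forecast is badly wrong.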

Automating Feedback Loops with AI

For years, traditional CI/CD pipelines have relied heavily on manual steps and static feedback, but that’s changing fast. AI is stepping in to create smarter systems that handle code integration, deployment, and routine reviews more efficiently. These systems also provide instant feedback on changes, making the entire process more seamless [16][1]. This shift is catching on quickly - over 80% of tech professionals now incorporate AI tools into their workflows [19].

Google’s DORA report highlights that 75% of professionals are using AI for tasks like writing and documenting code, optimizing codebases, and decoding complex structures [19]. AI isn’t just about automation - it’s making pipelines smarter. It identifies inefficiencies, predicts potential failures, and provides actionable insights [1]. These capabilities allow pipelines to detect, analyze, and resolve build issues on their own, paving the way for faster, more reliable software deployments [19].

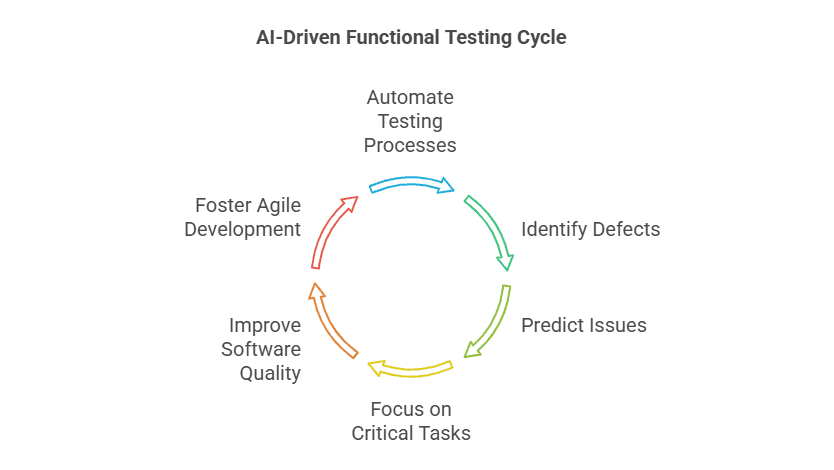

Self-Updating Test Suites

One of the standout advancements in AI-driven DevOps is self-updating test suites. These systems adapt to application changes without requiring manual updates. By leveraging machine learning, they review past data, spot trends, and even predict issues before they arise [22]. This creates a framework where test scripts automatically adjust to reflect changes in an application’s UI or API [23].

Here’s how it works: when a test script fails, AI kicks in to analyze the error, identify what changed, update the necessary parameters, and rerun the test [20]. For example, Google uses AI to detect UI changes that break test scripts and then fixes them automatically. This reduces the maintenance load on QA teams, letting them focus on more strategic testing efforts [23].
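The analyze, update, and rerun cycle can be illustrated with a locator that repairs itself when the primary selector disappears. This is a hypothetical sketch of the general technique, not a description of how Google or any specific tool implements it; the page is modeled as a plain dictionary and the selectors are invented for the example.

```python
def find_with_healing(page, locator):
    """Find an element, falling back to alternate selectors if the primary breaks.

    page: dict mapping selector -> element (a stand-in for a real DOM query).
    locator: dict with a "primary" selector and a list of "fallbacks".
    Returns (element, locator); the locator is updated when a fallback matched.
    """
    if locator["primary"] in page:
        return page[locator["primary"]], locator
    for candidate in locator["fallbacks"]:
        if candidate in page:
            healed = {
                "primary": candidate,
                "fallbacks": [locator["primary"]]
                + [f for f in locator["fallbacks"] if f != candidate],
            }
            return page[candidate], healed
    raise LookupError(f"No selector matched for {locator['primary']}")

# Hypothetical UI change: the button's id was removed, its data-test attribute kept.
page_after_release = {"[data-test=checkout]": "<button>"}
locator = {"primary": "#checkout-btn",
           "fallbacks": ["[data-test=checkout]", "text=Checkout"]}

element, locator = find_with_healing(page_after_release, locator)
print(locator["primary"])
```

Persisting the healed locator is what removes the maintenance step: the next run starts from the selector that actually works.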

AI can also generate and prioritize test cases by analyzing user stories, code changes, and past defects [23]. A fintech company recently demonstrated this by using generative AI to create over 1,200 test scenarios for a payment gateway. By parsing Swagger documentation, they cut test design time by an impressive 70% [21]. Looking ahead, projections suggest that by 2025, AI will handle 80% of test maintenance tasks [21].
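Generating scenarios from Swagger documentation starts with walking the spec. The sketch below enumerates one scenario per documented response of each operation in an OpenAPI-style dictionary. It is a rule-based illustration, not generative AI, and the spec fragment and function name are assumptions.

```python
HTTP_METHODS = {"get", "post", "put", "patch", "delete"}

def scenarios_from_spec(spec):
    """List one test scenario per documented response of every operation."""
    cases = []
    for path, item in spec.get("paths", {}).items():
        for method, operation in item.items():
            if method not in HTTP_METHODS:
                continue  # skip non-operation keys such as "parameters"
            for status in operation.get("responses", {}):
                cases.append(f"{method.upper()} {path} -> expect {status}")
    return cases

# Hypothetical fragment of a payment gateway spec.
spec = {
    "paths": {
        "/payments": {
            "post": {"responses": {"201": {}, "400": {}}},
        },
        "/payments/{id}": {
            "get": {"responses": {"200": {}, "404": {}}},
        },
    }
}

for case in scenarios_from_spec(spec):
    print(case)
```

An AI-based generator would go further by proposing request bodies and edge cases for each scenario, but the spec remains the source of truth for what must be covered.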

Platforms like Qodex are leading the charge in AI-powered testing. This tool scans repositories, discovers APIs, and generates detailed test suites - including unit, functional, regression, and even OWASP Top 10 security tests - using simple English commands. As products evolve, Qodex automatically updates its tests, keeping pace with rapid development cycles and easing the workload for development teams.

Deployment Validation with AI

AI doesn’t stop at testing - it’s also transforming post-deployment validation. After deployment, AI ensures applications meet the highest standards by catching issues that pre-production testing might have missed [26].

One way AI achieves this is through intelligent data workflows. These workflows clean data, detect anomalies, and validate data during deployment [25]. The impact is significant: they can cut data processing errors by up to 50%. Considering that bad data costs the U.S. economy about $3.1 trillion annually, this is a game-changer [25]. By embedding AI models into these workflows, organizations can maintain near real-time data quality, with systems learning from past adjustments to improve future performance [25].

AI’s predictive analytics further enhances reliability by anticipating data failures and enabling proactive fixes. For instance, by analyzing quarantined data that failed quality checks, AI can trace the root causes and address them early in the development cycle [25]. When integrated into CI/CD pipelines, this process automates repetitive tests, reduces human error, and ensures smoother deployments [24].
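The quarantine step can be sketched as a validation pass that separates clean records from failed ones and then counts failure reasons to hint at root causes. The field names and rules below are hypothetical, and real workflows would learn or configure such rules rather than hard-code them.

```python
from collections import Counter

def validate(record):
    """Return a list of problems found in one record (empty if it is clean)."""
    problems = []
    if not record.get("order_id"):
        problems.append("missing order_id")
    amount = record.get("amount")
    if not isinstance(amount, (int, float)) or amount < 0:
        problems.append("invalid amount")
    return problems

def split_batch(records):
    """Separate clean records from quarantined ones, keeping the reasons."""
    clean, quarantined = [], []
    for record in records:
        problems = validate(record)
        if problems:
            quarantined.append((record, problems))
        else:
            clean.append(record)
    return clean, quarantined

# Hypothetical batch arriving during a deployment.
batch = [
    {"order_id": "A1", "amount": 19.99},
    {"order_id": "", "amount": 5.0},
    {"order_id": "A3", "amount": -2},
    {"amount": "n/a"},
]

clean, quarantined = split_batch(batch)
root_causes = Counter(p for _, problems in quarantined for p in problems)
print(len(clean), "clean;", dict(root_causes))
```

Counting reasons across the quarantined set is the simplest form of root-cause tracing: a sudden jump in one reason after a release points at the change that introduced it.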

AI-powered tools like CRken also play a key role in deployment validation. By streamlining code reviews, CRken has been shown to reduce feature release times by up to 30% [18]. Incorporating AI into this phase not only saves time but also cuts down on unnecessary interventions, freeing up resources and enabling teams to focus on innovation [26].

AI-Powered API Testing and Security

As discussed earlier, AI is reshaping the landscape of anomaly detection and automated test updates. But its transformative impact goes beyond that - it’s revolutionizing API testing and security. APIs are the backbone of modern applications, and unfortunately, they’re also prime targets for attacks. In fact, by 2022, APIs were expected to become the most frequently attacked entry point for enterprise web applications [27]. This growing risk has pushed DevOps teams to adopt AI-powered tools that not only enhance security but also keep up with fast-paced development cycles.

Automated API Discovery

Cataloging every API endpoint by hand is tedious and prone to mistakes. AI-powered tools take this burden off developers by automatically scanning code repositories and mapping out APIs, including those that could easily be overlooked during manual reviews.

Take Qodex, for example. This platform scans repositories to identify all APIs in your codebase, creating a comprehensive inventory of your API environment. This is especially useful in microservices architectures, where endpoints are often scattered across multiple repositories and services. By automating the discovery process, teams save time, ensure accuracy, and gain a clear, real-time view of their API landscape, making it easier to spot potential security gaps.
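To show what repository scanning involves at its simplest, the sketch below searches source text for FastAPI-style route decorators such as `@app.get("/path")` and builds an endpoint inventory. It is not how Qodex works internally; the regular expression, the file contents, and the function name are assumptions, and a real scanner would walk the repository and handle many frameworks.

```python
import re

# Matches decorators like @app.get("/orders") or @router.post('/orders').
ROUTE_RE = re.compile(r"@\w+\.(get|post|put|patch|delete)\(\s*[\"']([^\"']+)[\"']")

def discover_endpoints(sources):
    """Build a sorted inventory of (method, path, filename) from source text.

    sources: dict mapping filename -> file contents.
    """
    found = []
    for filename, code in sources.items():
        for method, path in ROUTE_RE.findall(code):
            found.append((method.upper(), path, filename))
    return sorted(found)

# Hypothetical files from two services.
sources = {
    "orders.py": '@app.get("/orders")\ndef list_orders(): ...\n'
                 '@app.post("/orders")\ndef create_order(): ...\n',
    "users.py": "@router.get('/users/{user_id}')\ndef get_user(user_id): ...\n",
}

for method, path, filename in discover_endpoints(sources):
    print(f"{method:6} {path:20} {filename}")
```

Recording the filename alongside each endpoint helps in microservices setups, where the same path prefix can be served from several repositories.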

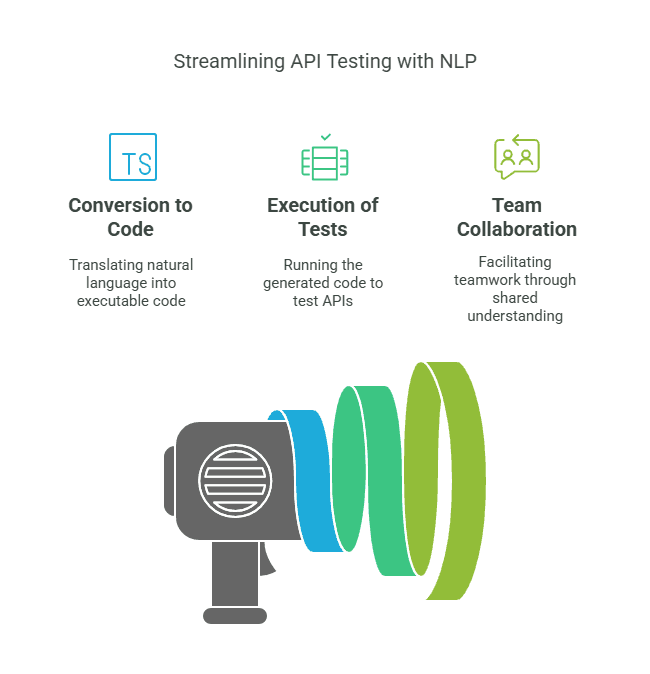

Natural Language Test Generation

Traditionally, writing API tests required significant technical expertise and time. AI flips this script by enabling developers to create sophisticated test scenarios using plain English, making API testing more accessible to a broader range of team members.

Using natural language processing, AI can automate the creation of test cases, even for edge conditions that might be missed in manual testing [28]. This automation not only reduces human error but also leads to more reliable results.

For instance, Qodex allows developers to describe tests in simple English, and the AI converts these descriptions into executable test cases. Whether it’s unit, functional, or regression tests, the platform handles it all. It even keeps tests up-to-date as your application evolves, streamlining testing cycles and cutting down on maintenance overhead [28]. This natural language interface works hand-in-hand with automated test updates, making DevOps workflows more efficient and less time-intensive.

Security Compliance Testing

Security testing is a crucial part of the DevOps process, and AI is stepping in to make it faster and more thorough. It can automatically generate and execute tests for vulnerabilities outlined in the OWASP Top 10 and other key security standards.

The global AI in cybersecurity market is projected to grow from $8.8 billion in 2019 to $38.2 billion by 2026, reflecting a compound annual growth rate of 23.3% [29]. This surge is fueled by AI’s ability to process massive data sets and detect complex attack patterns or subtle malicious activities [30]. Platforms like Qodex align with this trend by integrating OWASP Top 10 security tests into regular development workflows. This means developers can identify vulnerabilities like SQL injection, cross-site scripting, and authentication flaws without needing deep security expertise.

AI tools also provide continuous security monitoring, delivering real-time insights [29]. By identifying and addressing issues early in the development cycle, when fixes are cheaper and easier, these tools ensure every API is thoroughly vetted. While AI significantly enhances the efficiency and scope of security testing, human oversight remains critical for validating results and addressing unique or emerging threats.
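As one narrow example of an automated security check, the sketch below probes a rendering function for reflected cross-site scripting by submitting hostile input and checking whether it comes back unescaped. Both handlers and the probe strings are hypothetical, and this covers only one small slice of the OWASP Top 10.

```python
import html

# Illustrative probe strings; real scanners use much larger payload sets.
XSS_PROBES = ["<script>alert(1)</script>", '"><img src=x onerror=alert(1)>']

def render_search_page(query):
    """Hypothetical handler under test: escapes user input before rendering."""
    return f"<p>Results for {html.escape(query)}</p>"

def render_unsafe(query):
    """Hypothetical vulnerable handler, included for contrast."""
    return f"<p>Results for {query}</p>"

def xss_findings(render):
    """Return the probes that are reflected back without escaping."""
    return [probe for probe in XSS_PROBES if probe in render(probe)]

print("safe handler findings:", xss_findings(render_search_page))
print("unsafe handler findings:", len(xss_findings(render_unsafe)))
```

A passing result here only shows that these particular probes were escaped, which is why the human review mentioned above still matters.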

Challenges in AI-Driven Monitoring

AI-driven monitoring comes with its share of hurdles, particularly when it comes to data quality and algorithm transparency. Let’s dive into these challenges and their impact.

Data Quality and Model Accuracy

Poor data quality is a costly problem, with organizations losing an average of $12.9 million annually because of it [31]. Alarmingly, 85% of AI initiatives fail due to bad data [35], and 87% of data science projects never make it to production for similar reasons [34].

In the context of DevOps monitoring, data quality refers to the accuracy, completeness, and compliance of data with privacy, ethical, and security standards [31]. Common pitfalls include incomplete datasets, bias, siloed information, and inconsistencies [31]. A lack of historical data also poses a challenge, especially for new applications or testing environments with limited data to analyze. Privacy regulations further restrict access to data, making it even harder to build reliable models [32]. Without high-quality data, AI models can generate flawed predictions, misclassify issues, and disrupt workflows [32].

To tackle these issues, organizations need to implement strong data governance practices. Automated tools for data cleaning, profiling, and real-time alerts can help maintain quality [31][33]. Using data quality scorecards to measure completeness and accuracy provides teams with actionable metrics to improve their datasets.
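A data quality scorecard can start as a per-field completeness ratio. The sketch below assumes that records are dictionaries and that `None` or an empty string counts as missing; the field names and sample records are illustrative.

```python
def completeness(records, fields):
    """Return the fraction of records with a usable value for each field."""
    total = len(records)
    if total == 0:
        return {field: 0.0 for field in fields}
    return {
        field: sum(1 for r in records if r.get(field) not in (None, "")) / total
        for field in fields
    }

# Hypothetical monitoring records.
records = [
    {"host": "web-1", "latency_ms": 120, "region": "us-east"},
    {"host": "web-2", "latency_ms": None, "region": "us-east"},
    {"host": "web-3", "latency_ms": 95, "region": ""},
    {"host": "web-4", "latency_ms": 101},
]

scorecard = completeness(records, ["host", "latency_ms", "region"])
for field, score in scorecard.items():
    print(f"{field}: {score:.0%}")
```

Tracking these ratios over time gives teams a concrete number to act on before feeding the data to a model.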

Algorithm Transparency and Trust

Transparency is another critical challenge for AI systems. Trust in AI monitoring hinges on understanding how and why decisions are made. Unfortunately, many AI algorithms function as "black boxes", making it hard for teams to decipher why specific alerts are triggered or recommendations are provided. This lack of clarity has real consequences: 75% of businesses believe it could drive customer churn [36], even as 65% of CX leaders see AI as essential to their strategies [36].

"Being transparent about the data that drives AI models and their decisions will be a defining element in building and maintaining trust with customers." - Zendesk CX Trends Report 2024 [36]

Transparency in AI requires explainability, interpretability, and accountability [36]. For DevOps teams, this means understanding not just what the AI detects but also why it flagged an issue and what data influenced its decision. Without this clarity, AI models can produce false positives, false negatives, or inconsistent results, leading to workflow disruptions and eroding trust [32].
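One lightweight way to make an alert explainable is to attach the signals that drove it, ranked by how far each deviated from its baseline. The sketch below does this with z-scores; the metric names, baselines, and limit are hypothetical, and it illustrates the principle rather than any particular explainability framework.

```python
def explain_alert(current, baselines, z_limit=3.0):
    """Return (metric, z-score) pairs that exceeded the limit, largest first.

    current: dict of metric -> latest value.
    baselines: dict of metric -> (mean, standard deviation), with deviation > 0.
    """
    reasons = []
    for name, value in current.items():
        mean_, std_ = baselines[name]
        z = (value - mean_) / std_
        if abs(z) >= z_limit:
            reasons.append((name, round(z, 1)))
    reasons.sort(key=lambda reason: abs(reason[1]), reverse=True)
    return reasons

# Hypothetical baselines: (mean, standard deviation) per metric.
baselines = {"error_rate_pct": (1.0, 0.5), "latency_ms": (100, 10), "cpu_pct": (50, 10)}
current = {"error_rate_pct": 4.0, "latency_ms": 150, "cpu_pct": 55}

for metric, z in explain_alert(current, baselines):
    print(f"{metric} deviated by {z} standard deviations")
```

An alert that arrives with this list tells the team both what was flagged and which data influenced the decision.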

Organizations can address these concerns by documenting the decision-making processes behind their AI systems [37]. Establishing clear oversight and accountability for AI-driven processes is equally important [3]. Some companies are forming AI Ethics and Compliance Committees to ensure their models align with organizational values and legal standards [38].

Routine audits and ongoing evaluations of AI systems are crucial for addressing ethical concerns [39][40]. Companies like OpenAI set an example by publishing research and detailed documentation on their AI practices, fostering transparency [36].

Finally, improving data literacy across all stakeholders is key [33]. This involves training DevOps teams and communicating the strengths and limitations of AI systems [4]. By doing so, organizations can build trust and ensure their AI tools are used effectively.

The Future of AI in DevOps Monitoring

AI is reshaping DevOps monitoring in ways that are both exciting and transformative. Projections show the market for Generative AI in DevOps skyrocketing from $1.87 billion in 2024 to $9.58 billion by 2029, and an impressive $47.3 billion by 2034 [42]. By 2027, it's estimated that 70% of DevOps pipelines will incorporate AI-driven processes [2].

"AI is redefining DevOps, turning once-manual processes into intelligent, self-optimizing systems. With predictive analytics, automated debugging, and real-time insights, teams can shift from firefighting issues to driving innovation." – Hyperight [1]

The Rise of Self-Optimizing Systems

AI tools are no longer just about simple automation - they're morphing into autonomous systems that can manage entire software lifecycles with minimal human input [44]. These systems don’t just fix problems; they anticipate them, solve them proactively, and continuously improve by analyzing both historical and real-time data. This shift builds on earlier advances in predictive analytics and automated monitoring.

Even better, the cost of using AI for these tasks is plummeting. AI inference costs have been dropping by about 10x year-over-year, making high-end tools more accessible [41]. For example, in November 2024, Llama 3.2 3B delivered GPT-3-level performance for just $0.06 per million tokens - a 1,000x cost reduction over three years [41].
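The two figures above are consistent with each other, as a quick calculation shows. The implied starting price below is derived from the quoted numbers and is not stated in the source.

```python
annual_factor = 10         # costs fall about 10x per year
years = 3
price_2024_usd = 0.06      # per million tokens, as quoted above

total_reduction = annual_factor ** years
implied_start_price = round(price_2024_usd * total_reduction, 2)

print(f"{total_reduction}x reduction over {years} years")
print(f"Implied price three years earlier: ${implied_start_price} per million tokens")
```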

Real-World Applications of AI in DevOps

Some of the most forward-thinking organizations are already showcasing how AI can transform DevOps:

GitHub: Uses AI-powered bots for real-time code reviews and error detection, speeding up development cycles [44].

NASA: Relies on AI to continuously monitor mission-critical systems, with automated anomaly detection providing instant alerts [44].

Facebook: Employs predictive AI to detect issues like database slowdowns, automatically adjusting workloads to maintain performance [44].

These examples highlight how AI is enabling faster, smarter, and more reliable software development and operations.

Enhanced Collaboration and Security

AI isn't just automating tasks - it’s fostering better collaboration and strengthening security. Explainable AI is making decision-making processes more transparent, while AI-human collaboration tools are boosting team productivity [17]. On the security front, AI-driven systems are seamlessly integrating into DevSecOps workflows, identifying abnormal usage patterns and preventing unauthorized access [1].

Specialized Tools and Hyperautomation

The emergence of specialized platforms like Qodex is pushing automation to the next level. Qodex, for instance, can scan repositories, discover APIs, and generate a full suite of tests - including unit, functional, regression, and OWASP Top 10 security tests - all through plain English commands. This kind of intelligent automation is redefining how DevOps teams handle complex workflows.

Hyperautomation is another key trend. By integrating AI across all phases of DevOps, from anomaly detection to feedback loops, teams are adopting event-driven pipelines and progressive delivery methods. These approaches are setting new benchmarks for CI/CD, while platform engineering is enabling self-service DevOps environments [43]. AI agents are also becoming more advanced, incorporating features like reasoning, planning, multi-agent collaboration, and memory management to tackle increasingly complex workflows [41].

Shifting Roles and Strategic Focus

As AI takes over routine tasks like monitoring and testing, DevOps teams are shifting their focus to more strategic and innovative work [17]. AI-assisted code reviews are improving code quality, while AI-based infrastructure management is optimizing resource allocation. This evolution allows professionals to prioritize innovation and long-term planning rather than getting bogged down in day-to-day firefighting.

Building a Future-Ready DevOps Strategy

The key to success lies in starting small, iterating, and involving stakeholders at every step. Transparency and accountability are critical to ensuring trust in AI-driven processes [3]. The future of DevOps monitoring isn’t just about better tools - it’s about rethinking how software is built, deployed, and maintained in an era of intelligent automation. AI is not just a tool for efficiency; it's a catalyst for reimagining the entire software development lifecycle.

Frequently Asked Questions

Why should you choose Qodex.ai?

Qodex.ai simplifies and accelerates the API testing process by leveraging AI-powered tools and automation. Here's why it stands out:

- AI-Powered Automation

Achieve 100% API testing automation without writing a single line of code. Qodex.ai’s cutting-edge AI reduces manual effort, delivering unmatched efficiency and precision.

- User-Friendly Platform

Effortlessly import API collections from Postman, Swagger, or application logs and begin testing in minutes. No steep learning curves or technical expertise required.

- Customizable Test Scenarios

Whether you’re using AI-assisted test generation or creating test cases manually, Qodex.ai adapts to your needs. Build robust scenarios tailored to your project requirements.

- Real-Time Monitoring and Reporting

Gain instant insights into API health, test success rates, and performance metrics. Our integrated dashboards ensure you’re always in control, identifying and addressing issues early.

- Scalable Collaboration Tools

Designed for teams of all sizes, Qodex.ai offers test plans, suites, and documentation that foster seamless collaboration. Perfect for startups, enterprises, and microservices architecture.

- Cost and Time Efficiency

Save time and resources by eliminating manual testing overhead. With Qodex.ai’s automation, you can focus on innovation while cutting operational costs.

- Continuous Integration/Delivery (CI/CD) Compatibility

Easily integrate Qodex.ai into your CI/CD pipelines to ensure consistent, automated testing throughout your development lifecycle.

How can I validate an email address using Python regex?

You can use the following regex pattern to validate an email address: ^[a-zA-Z0-9._%+-]+@[a-zA-Z0-9.-]+\.[a-zA-Z]{2,}$
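A minimal sketch of applying this pattern in Python follows. The function name is illustrative, and `fullmatch` is used so that a trailing newline cannot slip past the `$` anchor. Note that the pattern checks the general shape of an address only; it does not confirm that the mailbox exists.

```python
import re

EMAIL_RE = re.compile(r"^[a-zA-Z0-9._%+-]+@[a-zA-Z0-9.-]+\.[a-zA-Z]{2,}$")

def is_valid_email(address):
    """Return True if `address` matches the pattern in full."""
    return EMAIL_RE.fullmatch(address) is not None

for candidate in ["dev@example.com", "first.last+tag@sub.example.io",
                  "dev@example", "dev@@example.com"]:
    print(candidate, is_valid_email(candidate))
```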

What is Go Regex Tester?

Go Regex Tester is a specialized tool for developers to test and debug regular expressions in the Go programming environment. It offers real-time evaluation of regex patterns, aiding in efficient pattern development and troubleshooting.
